Abstract

Gaussian scale spaces are a cornerstone of signal representation and processing, with applications in filtering, multiscale analysis, anti-aliasing, and more. However, obtaining such a scale space is costly and cumbersome, in particular for continuous representations such as neural fields. We present an efficient and lightweight method to learn the fully continuous, anisotropic Gaussian scale space of an arbitrary signal. Based on Fourier feature modulation and Lipschitz bounding, our approach is trained self-supervised, i.e., training does not require any manual filtering. Our neural Gaussian scale-space fields faithfully capture multiscale representations across a broad range of modalities, and support a diverse set of applications. These include images, geometry, and light-stage data, as well as texture anti-aliasing and multiscale optimization.

Idea

To construct our neural field, we pair a positional encoding in the form of Fourier features with a Lipschitz-bounded multi-layer perceptron (MLP). When high encoding frequencies are dampened, the Lipschitz-bounded MLP cannot compensate, so its output lacks high frequencies. Training this architecture on the original signal while randomly dampening Fourier features thus forces the network to learn a continuous scale space of low-pass filtered versions of the signal, without requiring any manual filtering. At inference, filtered versions of the learned signal can be synthesized in a single forward pass using arbitrary variances or covariance matrices.
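The pairing described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the damping factor exp(-0.5 wᵀΣw) is the standard response of a Gaussian with covariance Σ to a sinusoid of angular frequency w, and the row-sum weight normalization is one simple way to bound a layer's Lipschitz constant. The function names, layer sizes, and frequency scale are all hypothetical.

```python
import numpy as np

def damped_fourier_features(x, freqs, cov):
    """Fourier features of x, each damped by the Gaussian's frequency response.

    x: (N, d) coordinates, freqs: (F, d) angular frequencies, cov: (d, d) covariance.
    """
    # Convolving sin(w.x) or cos(w.x) with a Gaussian of covariance `cov`
    # scales it by exp(-0.5 * w^T cov w).
    damp = np.exp(-0.5 * np.einsum("fi,ij,fj->f", freqs, cov, freqs))
    phase = x @ freqs.T
    return np.concatenate([damp * np.sin(phase), damp * np.cos(phase)], axis=1)

def bound_rows(W, bound=1.0):
    """Rescale rows of W so every absolute row sum is at most `bound` (assumed scheme)."""
    row_sums = np.abs(W).sum(axis=1, keepdims=True)
    return W * np.minimum(1.0, bound / np.maximum(row_sums, 1e-12))

def lipschitz_mlp(features, weights, biases, bound=1.0):
    """MLP whose infinity-norm Lipschitz constant is at most bound ** len(weights)."""
    h = features
    for i, (W, b) in enumerate(zip(weights, biases)):
        h = h @ bound_rows(W, bound).T + b
        if i < len(weights) - 1:
            h = np.maximum(h, 0.0)  # ReLU is 1-Lipschitz
    return h

# Hypothetical setup: 2D coordinates, 64 random frequencies, 3 output channels.
rng = np.random.default_rng(0)
freqs = rng.normal(scale=10.0, size=(64, 2))
sizes = [128, 32, 32, 3]
weights = [rng.normal(size=(o, i)) for i, o in zip(sizes[:-1], sizes[1:])]
biases = [np.zeros(o) for o in sizes[1:]]

x = rng.uniform(size=(5, 2))
sharp = lipschitz_mlp(damped_fourier_features(x, freqs, np.zeros((2, 2))), weights, biases)
blurred = lipschitz_mlp(damped_fourier_features(x, freqs, np.diag([0.05, 0.05])), weights, biases)
```

Because the MLP can amplify its input only by a bounded factor, shrinking the high-frequency features shrinks their contribution to the output. In training, the covariance would be drawn at random per sample while the target stays the unfiltered signal.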

The following interactive demo shows how closely our learned scale space (pink) resembles Gaussian smoothing (silver), and how crucial the Lipschitz constraint is in attaining this similarity:

Applications

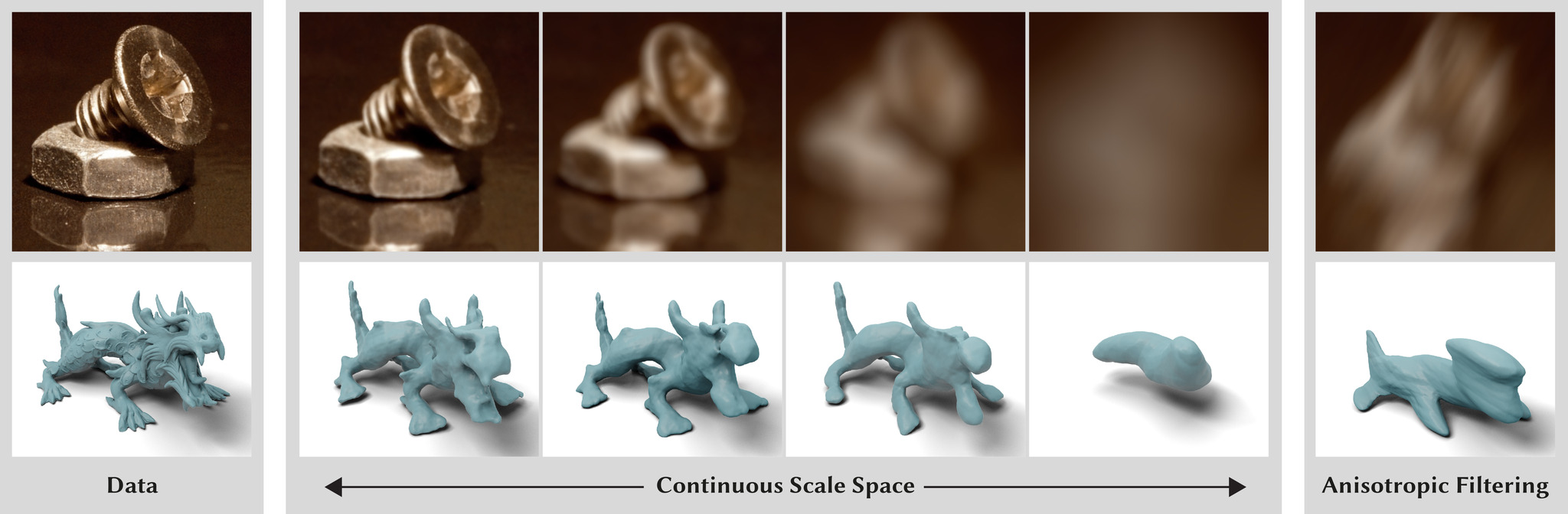

We now demonstrate how closely our approach mirrors real isotropic and anisotropic Gaussian smoothing obtained via costly Monte Carlo convolution on different modalities and applications.
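For context, a Monte Carlo reference of the kind mentioned above can be sketched as follows. Gaussian smoothing at a point equals the expected signal value under Gaussian-distributed offsets, so averaging many perturbed queries approximates the convolution. The function name, sample counts, and test signal are assumptions made for illustration.

```python
import numpy as np

def mc_gaussian_smooth(signal, x, cov, n_samples=4096, seed=0):
    """Estimate (signal * Gaussian(0, cov))(x) by averaging randomly offset queries."""
    rng = np.random.default_rng(seed)
    offsets = rng.multivariate_normal(np.zeros(len(x)), cov, size=n_samples)
    return signal(x + offsets).mean(axis=0)

# Hypothetical test signal: a single 2D sinusoid, whose smoothed value is known in closed form.
w = np.array([3.0, -2.0])
signal = lambda p: np.sin(p @ w)
cov = np.array([[0.04, 0.01], [0.01, 0.09]])
x = np.array([0.2, 0.7])

estimate = mc_gaussian_smooth(signal, x, cov, n_samples=200_000)
exact = np.exp(-0.5 * w @ cov @ w) * np.sin(x @ w)
```

The estimate converges slowly, with error shrinking roughly as one over the square root of the sample count, which is why such a reference is costly for every query point and every covariance.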

Images

Images can be seen as 2D signals with 3 channels. Below, we show a continuous walk through the space of covariance matrices. We commence with an isotropic sweep from low to high variance, then transition to a purely vertical covariance ellipse, and finally rotate the covariance ellipse while changing its proportions and size. In the lower corners, we also provide Fourier spectra.
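The covariance matrices traversed in such a walk can be parameterized by two standard deviations and a rotation angle. The sketch below uses the standard construction Σ = R diag(σ₁², σ₂²) Rᵀ; the helper name and the specific values are hypothetical.

```python
import numpy as np

def covariance_2d(sigma_major, sigma_minor, angle):
    """2D covariance with the given standard deviations, rotated by `angle` radians."""
    c, s = np.cos(angle), np.sin(angle)
    R = np.array([[c, -s], [s, c]])
    return R @ np.diag([sigma_major**2, sigma_minor**2]) @ R.T

isotropic = covariance_2d(0.1, 0.1, 0.0)        # equal smoothing in all directions
vertical = covariance_2d(0.1, 0.0, np.pi / 2)   # smoothing along the vertical axis only
tilted = covariance_2d(0.2, 0.05, np.pi / 6)    # elongated ellipse rotated by 30 degrees
```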

SDFs

Geometry can be represented continuously as the zero-level-set of a signed distance function (SDF). Below, we again show a continuous walk through the space of covariance matrices, commencing with an isotropic sweep, followed by anisotropic covariance matrices.

Light Stage

A light stage captures an object by illuminating it with one light at a time from all directions. We apply our method to the 4D product space of 2D pixel coordinates and 2D spherical light directions. In the following video, we first traverse through the subspace of light directions. We then increasingly smooth the light direction, but not the pixel coordinates. This incorporates neighboring lights and thus turns hard into soft shadows. Finally, we again traverse through the light directions while retaining the smoothing intensity.
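Smoothing the light direction but not the pixel coordinates corresponds to a 4D covariance that is nonzero only in the light-direction block. The sketch below assumes the coordinate order (pixel x, pixel y, light direction 1, light direction 2) and an isotropic light blur; both are illustrative choices, as is the function name.

```python
import numpy as np

def light_only_covariance(light_sigma):
    """4D covariance that smooths only the two light-direction coordinates."""
    cov = np.zeros((4, 4))
    cov[2:, 2:] = light_sigma**2 * np.eye(2)
    return cov

cov = light_only_covariance(0.3)

# A frequency that varies only with pixel position is left untouched,
# while one that varies with light direction is attenuated.
pixel_freq = np.array([8.0, 5.0, 0.0, 0.0])
light_freq = np.array([0.0, 0.0, 8.0, 5.0])
pixel_damping = np.exp(-0.5 * pixel_freq @ cov @ pixel_freq)
light_damping = np.exp(-0.5 * light_freq @ cov @ light_freq)
```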

Neural Texture

Texturing a 3D mesh requires resampling a texture into screen space. To avoid aliasing, this resampling must account for spatially-varying, anisotropic minification and magnification. Our method enables this functionality by representing the 2D texture as a continuous neural field, and then determining for each camera view and texture query the optimal anisotropic Gaussian kernel that results in alias-free resampling. Our approach successfully removes aliasing artifacts from the rendering.
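One common way to obtain such a per-query kernel, used in elliptical weighted average (EWA) texture filtering, is to push a small isotropic pixel footprint through the Jacobian of the pixel-to-texture mapping. The sketch below illustrates that idea and is not necessarily the authors' exact procedure; `uv_of_pixel`, `pixel_sigma`, and the linear example mapping are hypothetical.

```python
import numpy as np

def footprint_covariance(uv_of_pixel, pixel, pixel_sigma=0.5, eps=1e-3):
    """Texture-space covariance of an isotropic screen-space Gaussian footprint.

    uv_of_pixel maps a 2D pixel position to 2D texture coordinates.
    """
    pixel = np.asarray(pixel, dtype=float)
    J = np.empty((2, 2))
    for k in range(2):
        step = np.zeros(2)
        step[k] = eps
        # Central finite difference for column k of the Jacobian d(uv)/d(pixel).
        J[:, k] = (uv_of_pixel(pixel + step) - uv_of_pixel(pixel - step)) / (2 * eps)
    # A Gaussian with covariance s^2 * I maps to covariance s^2 * J J^T.
    return pixel_sigma**2 * J @ J.T

# Hypothetical mapping: the texture is minified four times more strongly along u than along v.
A = np.array([[0.02, 0.0], [0.0, 0.005]])
uv_of_pixel = lambda p: A @ p
cov = footprint_covariance(uv_of_pixel, pixel=[100.0, 50.0])
```

The resulting covariance is large where many texels fall into one pixel and small where the texture is magnified, so passing it to the scale-space field as the query covariance requests exactly the blur needed for that pixel.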

BibTeX

@article{mujkanovic2024ngssf,
  title = {Neural Gaussian Scale-Space Fields},
  author = {Felix Mujkanovic and Ntumba Elie Nsampi and Christian Theobalt and Hans-Peter Seidel and Thomas Leimk{\"u}hler},
  journal = {ACM Transactions on Graphics},
  year = {2024},
  volume = {43},
  number = {4}
}